Portainer Docker Swarm GUI

Update: 2021.07.05: Updated to Portainer-ce 2.6.0 (then to 2.6.1 on 2021.07.17)

Today saw the deployment of Portainer 2.6.0, since updated to 2.6.1. The necessary steps are outlined on the Portainer update page. Along with the update, the dependency on hub.docker.com was removed, which required a slight hack of the deployment .yml file: the objective was to make it pull from the local registry instead of the wider internet repository.

As the cluster is multi-architecture (AMD64, ARM64 and ARM32), a composite "image" that can load on any of the architectures had to be cobbled together. This required the newish docker manifest commands. There were a couple of gotchas along the way. Here's what worked.

- Download the image for each architecture onto a single machine. This was done with the aid of the local registry, which is accessible from at least one machine of each type. (The local registry now runs the official Docker registry image, which was a rabbit-hole upgrade of sorts.)

- Use the "docker manifest create" command, naming the downloaded images.

- If your environment has neglected to issue local certificates and runs an "insecure" local registry, then as well as having the insecure-registry option set up in the Docker configuration on each node, the command-line option --insecure must be added to the manifest commands:

```sh
docker manifest create --insecure <manifest-name> <image.arm32> <image.arm64> <image.amd64>
```

- Delete the images, then push the manifest:

```sh
docker manifest push --insecure <manifest-name>
```
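The steps above can be wrapped in a small POSIX shell script. This is a sketch under stated assumptions, not the exact procedure used here: the registry address registry:5000 matches the stack file below, but the per-architecture tag convention (<version>-arm32, <version>-arm64, <version>-amd64) and the function name build_manifest are hypothetical. The per-architecture images are assumed to already be in the local registry, since "docker manifest create" looks the referenced images up in a registry rather than in the local image store.

```sh
#!/bin/sh
# Sketch: combine per-architecture images into one multi-arch manifest list
# held in an insecure (no TLS certificate) local registry.
# ASSUMPTIONS (hypothetical): the registry is registry:5000 and each
# architecture's image is already pushed there as <name>:<version>-<arch>,
# e.g. registry:5000/portainer/agent:2.6.1-arm64.
# Each node's Docker daemon must also trust the registry, typically via
# /etc/docker/daemon.json: { "insecure-registries": ["registry:5000"] }
set -eu

REGISTRY="${REGISTRY:-registry:5000}"

# build_manifest NAME VERSION ARCH...
build_manifest() {
  if [ "$#" -lt 3 ]; then
    echo "usage: build_manifest NAME VERSION ARCH..." >&2
    return 1
  fi
  name="$1"
  version="$2"
  shift 2
  target="$REGISTRY/$name:$version"
  # Turn each remaining ARCH argument into a full per-architecture image ref.
  for arch in "$@"; do
    set -- "$@" "$REGISTRY/$name:$version-$arch"
    shift
  done
  # --insecure is needed on both commands because the registry has no certificate.
  docker manifest create --insecure "$target" "$@"
  docker manifest push --insecure "$target"
}

# Only act when given arguments, e.g.:
#   ./build-manifest.sh portainer/agent 2.6.1 arm32 arm64 amd64
if [ "$#" -gt 0 ]; then
  build_manifest "$@"
fi
```

Running it once per image (agent and portainer-ce) leaves a single tag per image in the registry that every node can pull.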

Change the portainer-agent-stack.yml like so (note: at first only the agents were changed; everything was switched over on 2021.07.17):

```yaml
version: '3.2'

services:
  agent:
    image: registry:5000/portainer/agent:2.6.1
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /var/lib/docker/volumes:/var/lib/docker/volumes
    networks:
      - agent_network
    deploy:
      mode: global
      placement:
        constraints:
          - "node.platform.os == linux"

  portainer:
    image: registry:5000/portainer/portainer-ce:2.6.1
    command: -H tcp://tasks.agent:9001 --tlsskipverify
    ports:
      - "9000:9000"
      - "8000:8000"
    volumes:
      - portainer_data:/data
    networks:
      - agent_network
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints: [node.role == manager]

networks:
  agent_network:
    driver: overlay
    attachable: true

volumes:
  portainer_data:
```
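With the edited file in place, the stack can be (re)deployed from a manager node. A minimal sketch, assuming the file is saved as portainer-agent-stack.yml and the stack is named portainer, so Swarm names the services portainer_agent and portainer_portainer; deploy_portainer is a hypothetical helper name.

```sh
#!/bin/sh
# Sketch: deploy the edited stack from a Swarm manager and list its services.
# ASSUMPTIONS (hypothetical): the file name and stack name below.
set -eu

STACK_FILE="${STACK_FILE:-portainer-agent-stack.yml}"
STACK_NAME="${STACK_NAME:-portainer}"

deploy_portainer() {
  # Creates the stack, or updates its services in place if it already exists.
  docker stack deploy -c "$STACK_FILE" "$STACK_NAME"
  # Services are prefixed with the stack name, e.g. portainer_agent.
  docker stack services "$STACK_NAME"
}

# Only act when asked, e.g.: ./deploy-portainer.sh deploy
if [ "${1:-}" = "deploy" ]; then
  deploy_portainer
fi
```

Re-running the same deploy after changing an image tag updates the existing services rather than creating a second stack.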

After the composite "manifest" has been created (it behaves rather like a single image), each node pulls the image for its own architecture. All the nodes covered by the global deployment label the newly deployed agent containers identically, even though they are running on different architectures. (Obviously this opens up the general possibility of saving power by migrating containers on and off smaller nodes, in much the same way your mobile phone uses big.LITTLE CPUs.)
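One way to see this is to compare what Swarm reports for the agent tasks with what each node actually pulled. A sketch, assuming the stack is named portainer (so the agent service is portainer_agent) and the image tag from the stack file above; the helper names are hypothetical. "docker service ps" should list the same image reference on every node, while "docker image inspect" run on an individual node reports that node's own architecture for the same tag.

```sh
#!/bin/sh
# Sketch: check that one manifest tag resolves to a different architecture per node.
# ASSUMPTIONS (hypothetical): the service and image names below.
set -eu

SERVICE="${SERVICE:-portainer_agent}"
IMAGE="${IMAGE:-registry:5000/portainer/agent:2.6.1}"

# Run on a manager: one line per running agent task, as node=image.
list_agent_tasks() {
  docker service ps --filter desired-state=running --format '{{.Node}}={{.Image}}' "$SERVICE"
}

# Run on any node: the OS/architecture of the image this node pulled for the tag.
local_image_platform() {
  docker image inspect --format '{{.Os}}/{{.Architecture}}' "$IMAGE"
}

# Run anywhere with the docker CLI: the manifest list and its platform entries.
manifest_platforms() {
  docker manifest inspect --insecure "$IMAGE"
}

case "${1:-}" in
  tasks) list_agent_tasks ;;
  local) local_image_platform ;;
  manifest) manifest_platforms ;;
esac
```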

Update: 2020.09.07: Moved to Portainer-ce 2.0.0

Moved over to the newer Portainer CE. It looks identical to the older 1.24-1 edition. All my saved settings were gone, so everything had to be set up again. No big deal, as most things had been saved elsewhere. Configuring LDAP was much less painful than in the previous version. Things just worked.

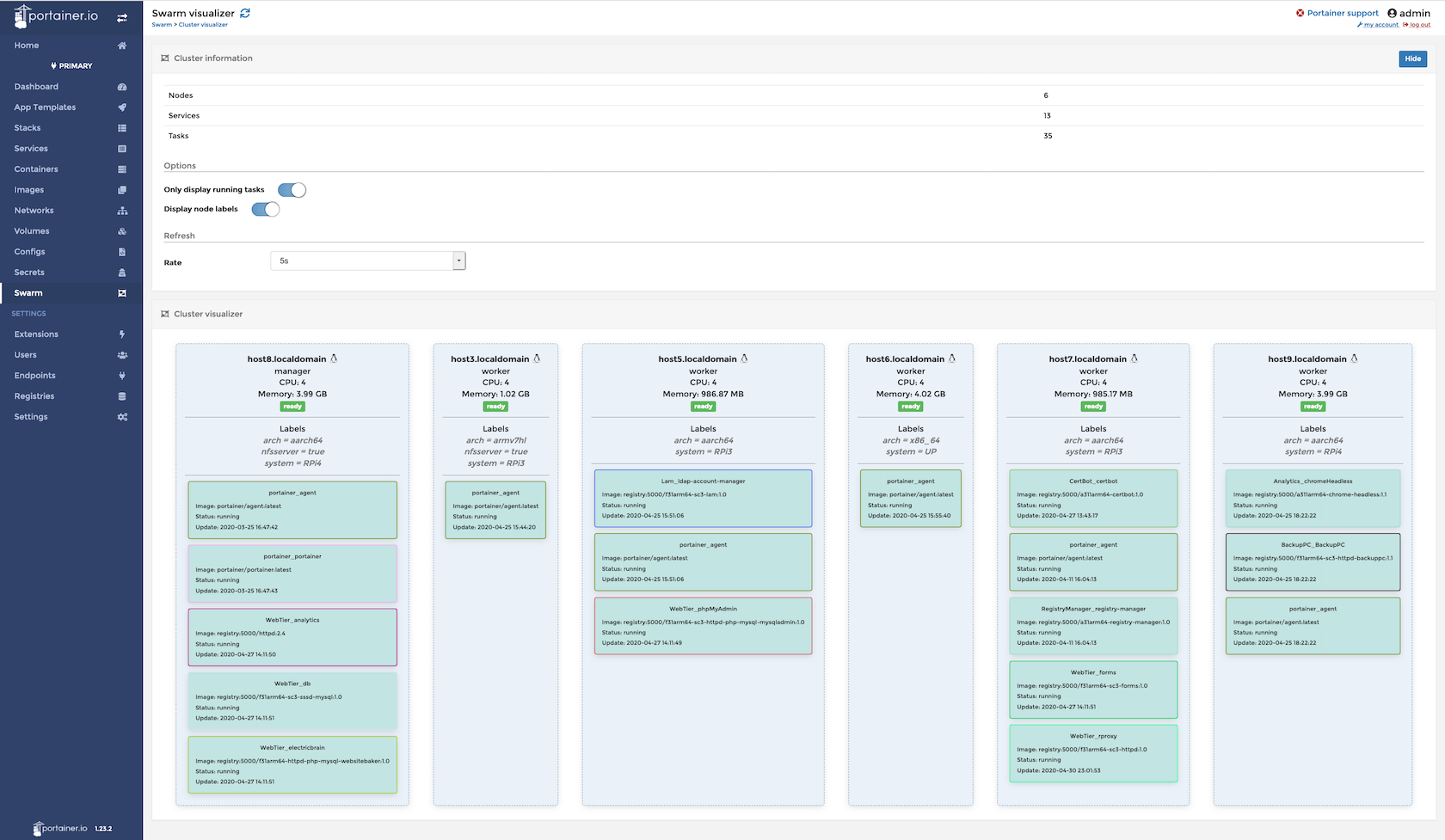

Portainer is a web-based GUI written in Angular. Its role in life is to transform what could easily become a rat's nest of hardwired, interconnected Docker containers into a graceful, easily managed, cohesive system.

The quality of the code is evident from the outset. It's certainly not hard to imagine business-grade deployments running quite significant workloads reliably. Portainer, the company, offers various levels of support subscription should the software become embedded in your computing environment.

The version used here at ElectricBrain is Portainer CE (Community Edition). The license is, quoting from Portainer's web site: "Provided as open core software under the zlib license, Portainer CE is 100% free and its source code is freely available on Github."

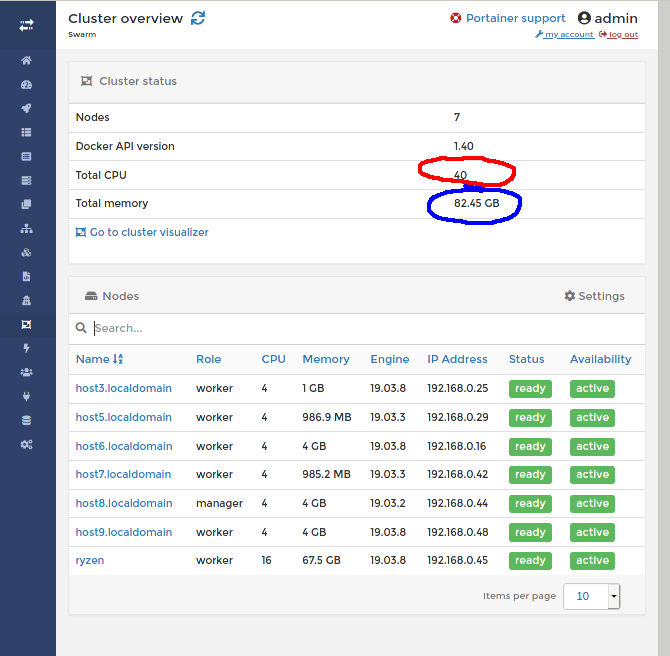

With the addition of the latest RPi4 8GB, the overall cluster has now passed 100GB of RAM and 48 CPUs.

Docker Swarm can integrate nodes with different CPU architectures into one cluster. Here at ElectricBrain the cluster nodes currently include AMD Ryzen 3700X (16 vCPU), Intel i7 (8 vCPU), Intel Atom x5-Z8350 (4 vCPU), ARM64 (ARMv8, 4 CPU) and ARM32 (ARMv7, 4 CPU) machines.
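The cluster totals can be checked from a manager node, because each node's CPU count and memory are part of its Swarm description (.Description.Resources.NanoCPUs, in billionths of a CPU, and .Description.Resources.MemoryBytes). A minimal sketch; sum_resources is a hypothetical helper, and it reports memory in GiB rather than GB.

```sh
#!/bin/sh
# Sketch: total the CPUs and memory that Swarm reports for all nodes.
set -eu

# Reads "NanoCPUs MemoryBytes" pairs (one node per line) on stdin and prints
# the cluster totals as whole CPUs and GiB.
sum_resources() {
  awk '{ cpus += $1; mem += $2 }
       END { printf "%d CPUs, %.1f GiB RAM\n", cpus / 1e9, mem / (1024 * 1024 * 1024) }'
}

# Live use on a manager node:
#   docker node inspect \
#     --format '{{.Description.Resources.NanoCPUs}} {{.Description.Resources.MemoryBytes}}' \
#     $(docker node ls -q) | sum_resources
```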

The cluster now has 3 nodes acting as managers, one of which is elected as the leader. It appears that Portainer's master controller likes to sit on the Swarm's leader node. A minor issue related to the election process is that any time the leadership flips over to another node, Portainer is restarted on that node. Essentially, Portainer needs access to its data from whatever node it's running on. The cluster here provides a common NFS share to all nodes, so this works fine.

Initially the Portainer data lived only on host8, meaning that whenever the master moved, it pretty much became a new installation. While not HA (highly available), the system is now more tolerant of software failures on the master controller.
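To watch the leader-following behaviour described above, compare which manager currently holds leadership with the node the Portainer server task is running on. A sketch, assuming the stack is named portainer (so the server service is portainer_portainer); the helper names are hypothetical.

```sh
#!/bin/sh
# Sketch: show the current Swarm leader and where the Portainer server task runs.
# ASSUMPTION (hypothetical): the service name below.
set -eu

PORTAINER_SERVICE="${PORTAINER_SERVICE:-portainer_portainer}"

# Each manager reports Leader or Reachable in its manager status.
show_managers() {
  docker node ls --filter role=manager --format '{{.Hostname}}={{.ManagerStatus}}'
}

# The running Portainer task, as node=current state.
show_portainer_node() {
  docker service ps --filter desired-state=running --format '{{.Node}}={{.CurrentState}}' "$PORTAINER_SERVICE"
}

# Only act when asked, e.g.: ./where-is-portainer.sh check
if [ "${1:-}" = "check" ]; then
  show_managers
  show_portainer_node
fi
```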

Analytics

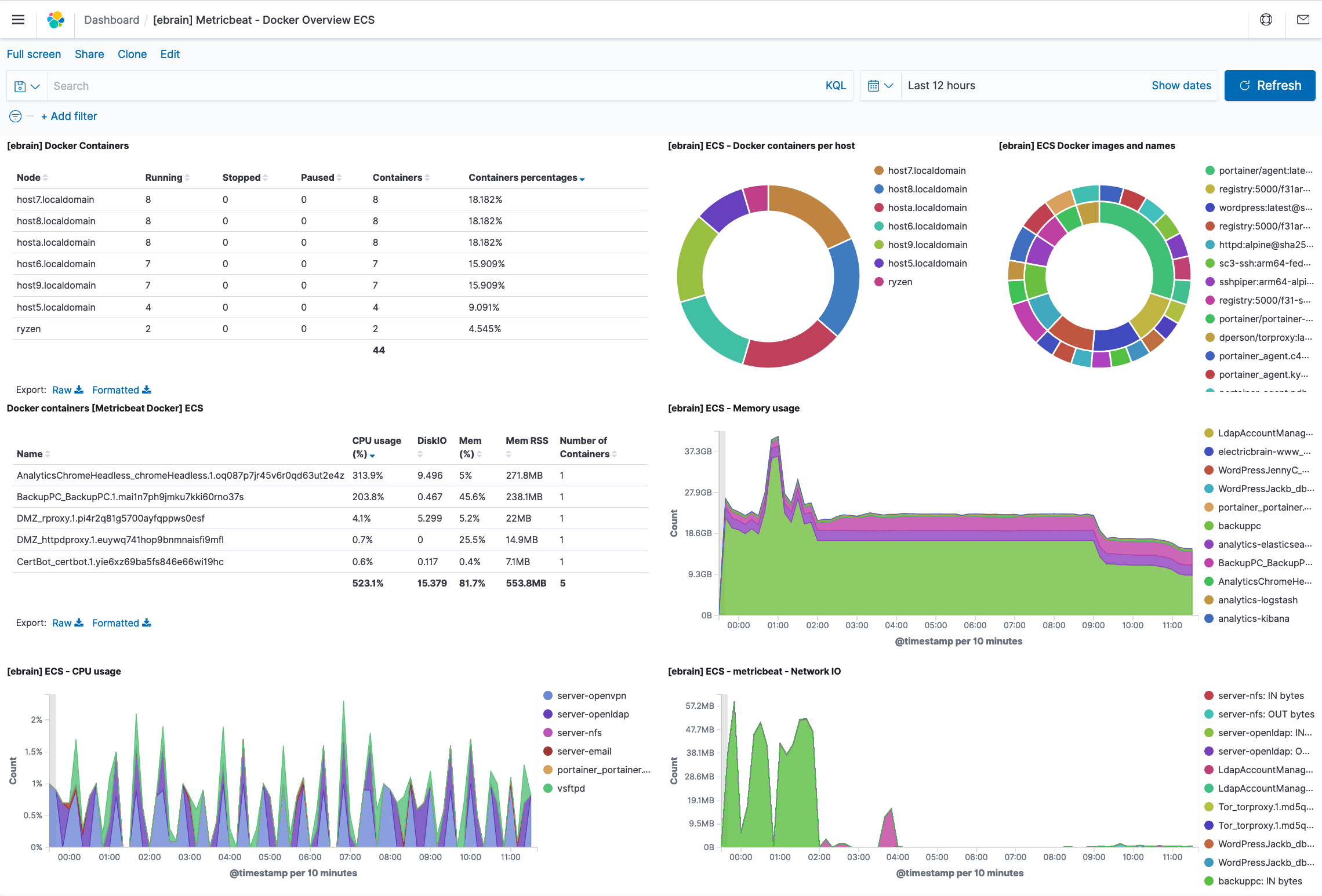

Portainer has a tiny amount of analytics built in, but nowhere near enough. Here at ElectricBrain the Elasticsearch (OSS edition) stack has been deployed to get a handle on what's going on.

Each (64-bit) node in the cluster runs various Elastic Beats agents, which gather vital logging information. The log information is sent to the Elasticsearch cluster (a single node here), where Kibana can access it for various performance-monitoring and security dashboards.
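To confirm that the beats are actually delivering data, the Elasticsearch REST API can be queried directly. A sketch, assuming Elasticsearch is reachable at a hypothetical http://elasticsearch:9200 without authentication and that the beats write to their default index names (filebeat-*, metricbeat-* and so on); the helper names are hypothetical.

```sh
#!/bin/sh
# Sketch: check Elasticsearch health and list the indices the beats write to.
# ASSUMPTIONS (hypothetical): ES_URL below, no authentication, default beat index names.
set -eu

ES_URL="${ES_URL:-http://elasticsearch:9200}"

# Overall cluster status: green, yellow or red. A single-node cluster may report
# yellow when indices ask for replica shards, since no second node can hold them.
es_health() {
  curl -fsS "$ES_URL/_cluster/health?pretty"
}

# One line per beat index, with headers (v), sorted by index name (s=index).
es_beat_indices() {
  curl -fsS "$ES_URL/_cat/indices/*beat-*?v&s=index"
}

# Only act when asked, e.g.: ./es-check.sh check
if [ "${1:-}" = "check" ]; then
  es_health
  es_beat_indices
fi
```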