Containerisation vs Virtualisation: What and How

What is the difference between these two technologies? This has become an FAQ for anyone trying to understand the evolving landscape as dockerisation marches onward, clearing all former technologies from its path.

As with all these technologies, history is often the best teacher. Looking back provides context for the current crop of what sometimes appear to be technology fads.

The ultimate goal of both technologies is to divide the physical resources of a computer among several virtual computers, with each one appearing to have exclusive use of its own domain. This is the foundation of cloud computing.

Hardware Virtualization

The essential principle of hardware virtualization is to interrupt the flow of a program whenever a "virtual" CPU attempts to execute a privileged instruction. Generally speaking, privileged instructions are those that manipulate hardware or the CPU's own control state (for example, disabling interrupts or changing memory mappings). The ones that matter most here are very low-level input/output instructions aimed at peripheral controller chips such as disk controllers, network controllers, video cards, or the USB ports that carry keyboard input.

Whenever the virtual CPU attempts to execute an I/O instruction to talk to a controller chip, the Virtual Machine Manager, or hypervisor, interrupts the program flow without the running program knowing. It takes a peek at which instruction is being attempted and which I/O address it wants to communicate with, then takes the data in the CPU registers and feeds it to a software emulation of the target I/O chip. This interruption of program flow upon executing a privileged instruction is called a trap, and the mechanism is very similar in concept to the classic hardware interrupt. The hypervisor runs at a higher privilege level than its subordinate virtual machines, so it doesn't trap on its own instructions.
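To make the trap mechanism concrete, here is a minimal Python sketch of the idea, not how any real hypervisor is written. It assumes a made-up three-instruction guest instruction set (MOV, ADD, OUT), and the `EmulatedDiskController` class is hypothetical. Ordinary instructions simply execute; the privileged OUT instruction diverts into the hypervisor's trap handler, which reads the guest's register and passes the value to a software model of the controller chip. Two virtual CPUs share the one emulated device, with the hypervisor mediating.

```python
from dataclasses import dataclass, field

# Toy guest instructions are tuples. MOV and ADD are ordinary instructions;
# OUT (write a register to an I/O port) is privileged and must trap.
PRIVILEGED = {"OUT"}


@dataclass
class EmulatedDiskController:
    """Software stand-in for a disk controller chip (hypothetical device)."""
    log: list = field(default_factory=list)

    def write_port(self, vm_name, port, value):
        # A real emulator would update controller state here; we just record it.
        self.log.append((vm_name, port, value))


@dataclass
class VirtualCPU:
    name: str
    regs: dict = field(default_factory=dict)


class Hypervisor:
    def __init__(self):
        # 0x1F0 is the traditional PC primary ATA port, used only as an example.
        self.devices = {0x1F0: EmulatedDiskController()}
        self.traps = 0

    def run(self, vcpu, program):
        for op, a, b in program:
            if op in PRIVILEGED:
                self.trap(vcpu, op, a, b)  # the guest never sees this detour
            elif op == "MOV":
                # On real hardware, unprivileged instructions run natively.
                vcpu.regs[a] = b
            elif op == "ADD":
                vcpu.regs[a] = vcpu.regs.get(a, 0) + b

    def trap(self, vcpu, op, port, reg):
        self.traps += 1
        # Peek at the attempted instruction and its I/O address, then hand the
        # register contents to the software emulation of that chip.
        if op == "OUT":
            self.devices[port].write_port(vcpu.name, port, vcpu.regs[reg])


hv = Hypervisor()
hv.run(VirtualCPU("vm1"), [("MOV", "r0", 40), ("ADD", "r0", 2), ("OUT", 0x1F0, "r0")])
hv.run(VirtualCPU("vm2"), [("MOV", "r0", 7), ("OUT", 0x1F0, "r0")])
print(hv.traps)                # 2
print(hv.devices[0x1F0].log)   # [('vm1', 496, 42), ('vm2', 496, 7)]
```

Only two of the five guest instructions cost a trap; everything else would run at full speed on real hardware, which is why this approach beats emulating every instruction.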

Using hardware virtualization, many copies of an operating system can run on a single physical CPU, each instance believing it is the only one. Furthermore, one physical computer can run several different operating systems at the same time, with the hypervisor coordinating their contention for hardware access. Emulating hardware in software is time consuming, however, so there is an associated performance penalty.

80286 16 bit CPU - 1982

Intel's first foray into this area came in 1982 with the 80286, which introduced protected mode and hardware memory protection. The ability to run multiple virtual 8086 CPUs (virtual 8086 mode) followed with the 80386 in 1985.

8086 16 bit CPU - 1978

The original 80286 processor ran at 4MHz, roughly 1000 times slower in clock speed than today's CPUs. Not only that, the chip contained a single CPU core, whereas almost all of today's CPU chips have at least four cores. Unfortunately, pretty much the only operating systems that ran in 8086 16-bit mode were CP/M-86 and the far more popular MS-DOS.

Hardware virtualization was something of a breakthrough back in the day. Before it, every CPU instruction was emulated in software, which, needless to say, was extremely slow. It's still possible to run such emulations today with tools such as QEMU. In fact, there are instructions here detailing how to run a virtual octa-core Raspberry Pi on your PC using QEMU software emulation.
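A minimal sketch of what full software emulation means: a fetch-decode-execute loop for a made-up accumulator machine (the instruction names LOAD, ADD, JLT and HALT are invented for illustration). Every guest instruction, even a trivial add, costs several host operations, which is where the slowness comes from. This is a toy interpreter and not how QEMU works internally; QEMU's TCG translates blocks of guest code into host code rather than interpreting one instruction at a time.

```python
def emulate(program, max_steps=10_000):
    """Interpret a toy accumulator machine one instruction at a time."""
    pc, acc, steps = 0, 0, 0
    while 0 <= pc < len(program) and steps < max_steps:
        op, arg = program[pc]          # fetch
        steps += 1
        if op == "LOAD":               # decode and execute, entirely in software
            acc = arg
            pc += 1
        elif op == "ADD":
            acc += arg
            pc += 1
        elif op == "JLT":              # jump to target if acc < limit
            limit, target = arg
            pc = target if acc < limit else pc + 1
        elif op == "HALT":
            break
        else:
            raise ValueError(f"illegal instruction {op!r} at {pc}")
    return acc, steps


# Add 3 repeatedly until the accumulator reaches at least 10.
prog = [("LOAD", 0), ("ADD", 3), ("JLT", (10, 1)), ("HALT", None)]
print(emulate(prog))  # (12, 10)
```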

Early software containerization attempts

One of the first attempts at containerization-style isolation and resource management was User Mode Linux (UML). UML was originally available as a patch for the 2.2.x kernel (circa 1999). Once patched, the kernel could run as a userland application. The significance of this is that a Linux kernel itself effectively became an ordinary program that any user could run, and that program could in turn run other Linux programs. It became possible to run multiple "virtual" machines on a single host.

Because of various inefficiencies, it turned out that patching the host kernel to provide Separate Kernel Address Spaces (the SKAS patch) sped things up considerably. This was applied to host kernels from version 2.6 onward, first released in 2003. In those days this author deployed multiple UML VMs onto dedicated servers around the world quite successfully, and in doing so upset the business model of hosting centers, which was predicated on one computer, one OS. UML, however, was originally designed for the 32-bit x86 (i386) instruction set.

Xen

Next came Xen, and with it "paravirtualized" virtual machines. Instead of trapping the hardware I/O instructions that talk to I/O controllers, paravirtualization intercepts proceedings at a much higher level. Rather than catching instructions issued to a disk controller one at a time by means of traps and then pretending to be that controller, Xen replaces the entire device driver. The reasoning is that the software component that speaks to the disk device driver is normally the file system. By capturing whole blocks at the driver level, it's possible to queue each block alongside many others from other virtual machines, and all of those blocks can then be managed by the Xen block driver.

Another advantage was that the CPU didn't need to support hardware virtualization at all. The main restriction was that every virtual machine had to run an operating system modified to be aware of paravirtualization. Xen nevertheless later added full hardware-virtualized (HVM) support for the remaining operating systems that couldn't be paravirtualized.
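The split-driver idea can be sketched as follows. This is a hedged Python model assuming simple in-process queues: real Xen uses shared-memory rings and event channels between a guest's front-end driver (blkfront in Linux) and a back-end driver (blkback) in a privileged domain. The class names and the dictionary standing in for the disk are hypothetical. The point is that each guest hands over whole block requests, with no per-instruction traps, and a single back end drains every guest's queue in turn.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class BlockRequest:
    domain: str    # which guest VM issued the request
    sector: int
    data: bytes


class Frontend:
    """Paravirtual driver inside a guest: hands over whole blocks."""

    def __init__(self, domain, ring):
        self.domain, self.ring = domain, ring

    def write_block(self, sector, data):
        self.ring.append(BlockRequest(self.domain, sector, data))


class Backend:
    """Privileged side: drains every guest's queue and talks to the real disk."""

    def __init__(self):
        self.rings = {}
        # (domain, sector) -> data; each guest gets its own virtual disk.
        self.disk = {}

    def connect(self, domain):
        ring = deque()
        self.rings[domain] = ring
        return Frontend(domain, ring)

    def service(self):
        handled = 0
        # Round-robin across guests so no single VM monopolises the disk.
        while any(self.rings.values()):
            for ring in self.rings.values():
                if ring:
                    req = ring.popleft()
                    self.disk[(req.domain, req.sector)] = req.data
                    handled += 1
        return handled


backend = Backend()
vm1, vm2 = backend.connect("domU-1"), backend.connect("domU-2")
vm1.write_block(0, b"boot")
vm1.write_block(1, b"data")
vm2.write_block(0, b"other")
print(backend.service())  # 3
```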

One of the biggest proponents of the Xen hypervisor was, of course, AWS.


In September 2020 the Xen hypervisor was made to run on the Raspberry Pi 4B with 8 GB of RAM. In the article "Xen on Raspberry Pi 4 adventures", the authors describe the contortions needed to get it running, including upstream kernel patches and changes to Xen itself.

Containers, Docker, Kubernetes

Finally, namespace isolation was built into the Linux kernel. Namespaces are similar in nature to the Linux chroot command, but the concept is extended to several other types of resources. The chroot command allows the root user to launch a shell (or any other program) whose apparent top-level directory, /, is actually some directory in the real file system. The captive program can't normally escape, hence the oft-seen term "chroot jail", and so is confined to one area of the disk. The kernel itself enforces the program's view of the disk, so there is no need for driver-level interception or paravirtualization at all. Extending the same idea to networking, user IDs, inter-process communication, process IDs and so on gives rise to Snaps, Docker, Kubernetes and similar container technologies.
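A small user-space model of the view chroot gives a process may help. It is not how the kernel implements chroot: the kernel simply changes the process's root directory, and Python exposes the real call as `os.chroot`, which needs root privileges. The function name `resolve_in_jail` and the `/srv/jail` path are hypothetical. The sketch shows why `..` can't climb out: the path is normalised against the jail's own `/` before being mapped onto the real file system. Symlinks are ignored here, whereas the kernel resolves them inside the jail.

```python
import posixpath


def resolve_in_jail(jail_root: str, path: str) -> str:
    """Map a path as seen by a jailed program onto the real file system.

    A model of the chroot view only: '/' means jail_root and '..' can never
    climb above it. Symlinks are not followed (hypothetical helper).
    """
    # Normalise against the jail's '/'; normpath drops '..' at the root.
    inside = posixpath.normpath(posixpath.join("/", path))
    # Re-root the result under the jail directory on the real disk.
    return posixpath.normpath(posixpath.join(jail_root, inside.lstrip("/")))


print(resolve_in_jail("/srv/jail", "/etc/passwd"))          # /srv/jail/etc/passwd
print(resolve_in_jail("/srv/jail", "../../../etc/shadow"))  # /srv/jail/etc/shadow
```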

Again, a key advantage of containerization is that the CPU doesn't need to support hardware virtualization. Tiny computers such as the original Raspberry Pi Zero, with its 32-bit CPU, can run Docker.
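To check whether a given Linux machine has hardware virtualization at all, a rough sketch follows. It assumes an x86 host, where /proc/cpuinfo lists the `vmx` (Intel VT-x) or `svm` (AMD-V) flag. ARM kernels don't advertise virtualization support there, so the presence of /dev/kvm is used as a practical, architecture-neutral proxy. Neither check is definitive: firmware can disable the feature, and /dev/kvm only appears when the KVM module is loaded. None of this is needed to run containers.

```python
import os


def has_hw_virtualization(cpuinfo_text: str) -> bool:
    """True if x86 /proc/cpuinfo text lists Intel VT-x ('vmx') or AMD-V ('svm')."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = line.split(":", 1)[1].split()
            if "vmx" in flags or "svm" in flags:
                return True
    return False


def kvm_available() -> bool:
    """Proxy check: /dev/kvm exists once KVM is loaded and usable."""
    return os.path.exists("/dev/kvm")


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print("VT-x/AMD-V flag:", has_hw_virtualization(f.read()))
    except OSError:
        print("could not read /proc/cpuinfo (not Linux?)")
    print("/dev/kvm present:", kvm_available())
```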

Full virtualization on the ARM64 Cortex-A72 CPU with processor virtualization extensions

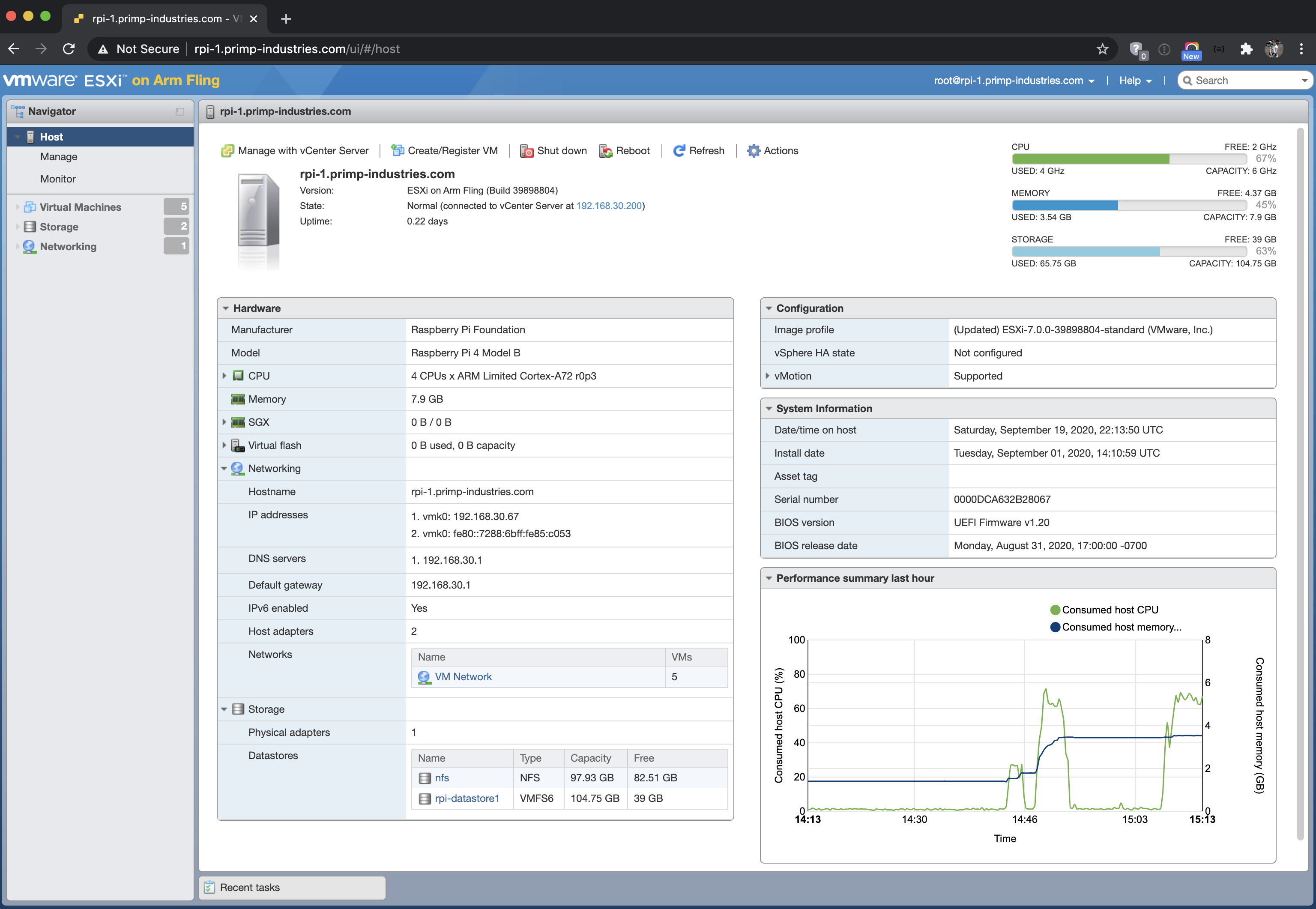

More recently, VMware's ESXi Arm Edition (December 17, 2021) has made an appearance for the Raspberry Pi. This implementation fully virtualizes and emulates a console and keyboard as well as a default NIC, and also implements virtual disks. Having started life on data-centre-class Graviton CPUs, VMware's Arm hypervisor has trickled down to the Pi.

All this is made possible by the ARM64 CPU virtualization extensions included in the Pi 4's Cortex-A72 CPU.

The advent of full virtualization on the Raspberry Pi means that entirely different and otherwise incompatible operating systems can now co-exist on the same bare metal. In theory, Linux and Windows should be able to run side by side on the same Pi.